Redis并发

- IO多路复用;

- 后台线程;

- 多线程;

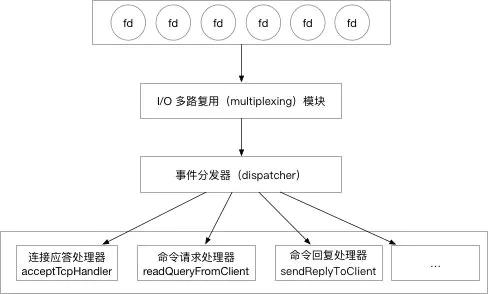

1、IO多路复用

Redis采用单线程IO多路复用来实现高并发,即注明的Reactor单线程模式。具体的可以看我之前的文章: Java的NIO及Netty 。

Redis的IO多路复用机制可以选择使用很多种,比如select,epoll,kqueue,evport等。关于IO多路复用的可以参考这篇文章: 并发编程之I/O多路复用及几种I/O模式

接下来看看Redis的实现。先看启动吧,即src/server.c的main函数。这个函数会进行环境设置、初始化参数、数据恢复等等一系列操作。

int main(int argc, char **argv) {

struct timeval tv;

int j;

char config_from_stdin = 0;

initServer();

......

moduleInitModulesSystemLast();

moduleLoadFromQueue();

ACLLoadUsersAtStartup();

InitServerLast();

loadDataFromDisk();

aeMain(server.el);

aeDeleteEventLoop(server.el);

return 0;

}initServer创建了事件循环,并创建了两种事件,一个是文件事件,一个是定时事件。定时事件主要用于处理后台任务。

server.el = aeCreateEventLoop(server.maxclients+CONFIG_FDSET_INCR);

/* Create the timer callback, this is our way to process many background

* operations incrementally, like clients timeout, eviction of unaccessed

* expired keys and so forth. */

if (aeCreateTimeEvent(server.el, 1, serverCron, NULL, NULL) == AE_ERR) {

serverPanic("Can't create event loop timers.");

exit(1);

}

/* Create an event handler for accepting new connections in TCP and Unix

* domain sockets. */

for (j = 0; j < server.ipfd_count; j++) {

if (aeCreateFileEvent(server.el, server.ipfd[j], AE_READABLE,

acceptTcpHandler,NULL) == AE_ERR)

{

serverPanic(

"Unrecoverable error creating server.ipfd file event.");

}

}循环事件创建过程:

aeEventLoop *aeCreateEventLoop(int setsize) {

aeEventLoop *eventLoop;

int i;

monotonicInit(); /* just in case the calling app didn't initialize */

if ((eventLoop = zmalloc(sizeof(*eventLoop))) == NULL) goto err;

eventLoop->events = zmalloc(sizeof(aeFileEvent)*setsize);

eventLoop->fired = zmalloc(sizeof(aeFiredEvent)*setsize);

if (eventLoop->events == NULL || eventLoop->fired == NULL) goto err;

eventLoop->setsize = setsize;

eventLoop->timeEventHead = NULL;

eventLoop->timeEventNextId = 0;

eventLoop->stop = 0;

eventLoop->maxfd = -1;

eventLoop->beforesleep = NULL;

eventLoop->aftersleep = NULL;

eventLoop->flags = 0;

if (aeApiCreate(eventLoop) == -1) goto err;

/* Events with mask == AE_NONE are not set. So let's initialize the

* vector with it. */

for (i = 0; i < setsize; i++)

eventLoop->events[i].mask = AE_NONE;

return eventLoop;

err:

if (eventLoop) {

zfree(eventLoop->events);

zfree(eventLoop->fired);

zfree(eventLoop);

}

return NULL;

}创建文件事件:

int aeCreateFileEvent(aeEventLoop *eventLoop, int fd, int mask,

aeFileProc *proc, void *clientData)

{

if (fd >= eventLoop->setsize) {

errno = ERANGE;

return AE_ERR;

}

aeFileEvent *fe = &eventLoop->events[fd];

if (aeApiAddEvent(eventLoop, fd, mask) == -1)

return AE_ERR;

fe->mask |= mask;

if (mask & AE_READABLE) fe->rfileProc = proc;

if (mask & AE_WRITABLE) fe->wfileProc = proc;

fe->clientData = clientData;

if (fd > eventLoop->maxfd)

eventLoop->maxfd = fd;

return AE_OK;

}

至此,Server初始化基本完成。

接着执行aeMain过程,传入的时间循环:

void aeMain(aeEventLoop *eventLoop) {

eventLoop->stop = 0;

while (!eventLoop->stop) {

aeProcessEvents(eventLoop, AE_ALL_EVENTS|

AE_CALL_BEFORE_SLEEP|

AE_CALL_AFTER_SLEEP);

}

}

aeProcessEvents处理各种事件。文件事件会调用acceptTCPHandler.此外也会调用beforeSleep.

int aeProcessEvents(aeEventLoop *eventLoop, int flags)

{

int processed = 0, numevents;

/* Nothing to do? return ASAP */

if (!(flags & AE_TIME_EVENTS) && !(flags & AE_FILE_EVENTS)) return 0;

/* Note that we want call select() even if there are no

* file events to process as long as we want to process time

* events, in order to sleep until the next time event is ready

* to fire. */

if (eventLoop->maxfd != -1 ||

if (eventLoop->beforesleep != NULL && flags & AE_CALL_BEFORE_SLEEP)

eventLoop->beforesleep(eventLoop);

for (j = 0; j < numevents; j++) {

aeFileEvent *fe = &eventLoop->events[eventLoop->fired[j].fd];

/* Fire the writable event. */

if (fe->mask & mask & AE_WRITABLE) {

if (!fired || fe->wfileProc != fe->rfileProc) {

fe->wfileProc(eventLoop,fd,fe->clientData,mask);

fired++;

}

}

/* If we have to invert the call, fire the readable event now

* after the writable one. */

if (invert) {

fe = &eventLoop->events[fd]; /* Refresh in case of resize. */

if ((fe->mask & mask & AE_READABLE) &&

(!fired || fe->wfileProc != fe->rfileProc))

{

fe->rfileProc(eventLoop,fd,fe->clientData,mask);

fired++;

}

}

processed++;

}

}

1、acceptTCPHandler

void acceptTcpHandler(aeEventLoop *el, int fd, void *privdata, int mask) {

int cport, cfd, max = MAX_ACCEPTS_PER_CALL;

char cip[NET_IP_STR_LEN];

UNUSED(el);

UNUSED(mask);

UNUSED(privdata);

while(max--) {

cfd = anetTcpAccept(server.neterr, fd, cip, sizeof(cip), &cport);

if (cfd == ANET_ERR) {

if (errno != EWOULDBLOCK)

serverLog(LL_WARNING,

"Accepting client connection: %s", server.neterr);

return;

}

serverLog(LL_VERBOSE,"Accepted %s:%d", cip, cport);

acceptCommonHandler(connCreateAcceptedSocket(cfd),0,cip);

}

}2、然后执行acceptCommonHandler

static void acceptCommonHandler(connection *conn, int flags, char *ip) {

client *c;

char conninfo[100];

UNUSED(ip);

/* Create connection and client */

if ((c = createClient(conn)) == NULL) {

serverLog(LL_WARNING,

"Error registering fd event for the new client: %s (conn: %s)",

connGetLastError(conn),

connGetInfo(conn, conninfo, sizeof(conninfo)));

connClose(conn); /* May be already closed, just ignore errors */

return;

}

/* Last chance to keep flags */

c->flags |= flags;

/* Initiate accept.

*

* Note that connAccept() is free to do two things here:

* 1. Call clientAcceptHandler() immediately;

* 2. Schedule a future call to clientAcceptHandler().

*

* Because of that, we must do nothing else afterwards.

*/

if (connAccept(conn, clientAcceptHandler) == C_ERR) {

char conninfo[100];

if (connGetState(conn) == CONN_STATE_ERROR)

serverLog(LL_WARNING,

"Error accepting a client connection: %s (conn: %s)",

connGetLastError(conn), connGetInfo(conn, conninfo, sizeof(conninfo)));

freeClient(connGetPrivateData(conn));

return;

}

}3、创建连接客户端

client *createClient(connection *conn) {

client *c = zmalloc(sizeof(client));

/* passing NULL as conn it is possible to create a non connected client.

* This is useful since all the commands needs to be executed

* in the context of a client. When commands are executed in other

* contexts (for instance a Lua script) we need a non connected client. */

if (conn) {

connNonBlock(conn);

connEnableTcpNoDelay(conn);

if (server.tcpkeepalive)

connKeepAlive(conn,server.tcpkeepalive);

connSetReadHandler(conn, readQueryFromClient);

connSetPrivateData(conn, c);

}

selectDb(c,0);

uint64_t client_id;

//每个客户端唯一的id

atomicGetIncr(server.next_client_id, client_id, 1);

c->id = client_id;

c->resp = 2;

c->conn = conn;

.......

initClientMultiState(c);

return c;

}

上面首先创建了一个读事件

connSetReadHandler(conn, readQueryFromClient);

4、从客户端读

readQueryFromClient

最终会调用processCommand执行命令:

int processCommandAndResetClient(client *c) {

int deadclient = 0;

server.current_client = c;

if (processCommand(c) == C_OK) {

commandProcessed(c);

}

if (server.current_client == NULL) deadclient = 1;

server.current_client = NULL;

/* performEvictions may flush slave output buffers. This may

* result in a slave, that may be the active client, to be

* freed. */

return deadclient ? C_ERR : C_OK;

}

2、后台线程

自Redis4.0之后,Redis增加了异步线程的支持,使得一些比较耗时的任务可以在后台异步线程执行,不必再阻塞主线程。之前del和flush等都会阻塞主线程,现在的ulink,flushall flushdb等操作都不会再阻塞。

异步线程是通过Redis的bio实现,即Background I/O。

Redis在启动时,在后台会初始化三个后台线程。

void InitServerLast() {

bioInit();

initThreadedIO();

set_jemalloc_bg_thread(server.jemalloc_bg_thread);

server.initial_memory_usage = zmalloc_used_memory();

}bioInit就是具体启动后台线程过程。启动的线程主要包括:

#define BIO_CLOSE_FILE 0 /* Deferred close(2) syscall. */

#define BIO_AOF_FSYNC 1 /* Deferred AOF fsync. */

#define BIO_LAZY_FREE 2 /* Deferred objects freeing. */即关闭文件描述佛的,AOF持久化的,以及惰性删除。这三个线程时完全独立的,互不干涉。每个线程都会有一个工作队列,用于生产和消费任务。在添加和移除队列中都会加锁,防止并发问题。

for (j = 0; j < BIO_NUM_OPS; j++) {

void *arg = (void*)(unsigned long) j;

if (pthread_create(&thread,&attr,bioProcessBackgroundJobs,arg) != 0) {

serverLog(LL_WARNING,"Fatal: Can't initialize Background Jobs.");

exit(1);

}

bio_threads[j] = thread;

}主线程负责把相关任务添加到对应线程的队列中。比如惰性删除的过程,即我们使用UNLINK,flushDB,flushall等命令时。

//添加任务到对应线程队列中,添加过程会加锁

void bioCreateBackgroundJob(int type, void *arg1, void *arg2, void *arg3) {

struct bio_job *job = zmalloc(sizeof(*job));

job->time = time(NULL);

job->arg1 = arg1;

job->arg2 = arg2;

job->arg3 = arg3;

pthread_mutex_lock(&bio_mutex[type]);

listAddNodeTail(bio_jobs[type],job);

bio_pending[type]++;

pthread_cond_signal(&bio_newjob_cond[type]);

pthread_mutex_unlock(&bio_mutex[type]);

}不要担心后台删除会出现幻读的问题,主线程首先会删除对应keyspace,接下来的命令都访问不了了。后台线程负责清理对象。看下具体代码“

int dbAsyncDelete(redisDb *db, robj *key) {

/* Deleting an entry from the expires dict will not free the sds of

* the key, because it is shared with the main dictionary. */

if (dictSize(db->expires) > 0) dictDelete(db->expires,key->ptr);

/* If the value is composed of a few allocations, to free in a lazy way

* is actually just slower... So under a certain limit we just free

* the object synchronously. */

//同步删除keyspace或者直接同步清除

dictEntry *de = dictUnlink(db->dict,key->ptr);

if (de) {

robj *val = dictGetVal(de);

size_t free_effort = lazyfreeGetFreeEffort(val);

/* If releasing the object is too much work, do it in the background

* by adding the object to the lazy free list.

* Note that if the object is shared, to reclaim it now it is not

* possible. This rarely happens, however sometimes the implementation

* of parts of the Redis core may call incrRefCount() to protect

* objects, and then call dbDelete(). In this case we'll fall

* through and reach the dictFreeUnlinkedEntry() call, that will be

* equivalent to just calling decrRefCount(). */

if (free_effort > LAZYFREE_THRESHOLD && val->refcount == 1) {

atomicIncr(lazyfree_objects,1);

bioCreateBackgroundJob(BIO_LAZY_FREE,val,NULL,NULL);

dictSetVal(db->dict,de,NULL);

}

}

/* Release the key-val pair, or just the key if we set the val

* field to NULL in order to lazy free it later. */

if (de) {

dictFreeUnlinkedEntry(db->dict,de);

if (server.cluster_enabled) slotToKeyDel(key->ptr);

return 1;

} else {

return 0;

}

}

接下来就是后台线程会从对应队列中取出任务执行:

void *bioProcessBackgroundJobs(void *arg) {

struct bio_job *job;

unsigned long type = (unsigned long) arg;

sigset_t sigset;

/* Check that the type is within the right interval. */

if (type >= BIO_NUM_OPS) {

serverLog(LL_WARNING,

"Warning: bio thread started with wrong type %lu",type);

return NULL;

}

switch (type) {

case BIO_CLOSE_FILE:

redis_set_thread_title("bio_close_file");

break;

case BIO_AOF_FSYNC:

redis_set_thread_title("bio_aof_fsync");

break;

case BIO_LAZY_FREE:

redis_set_thread_title("bio_lazy_free");

break;

}

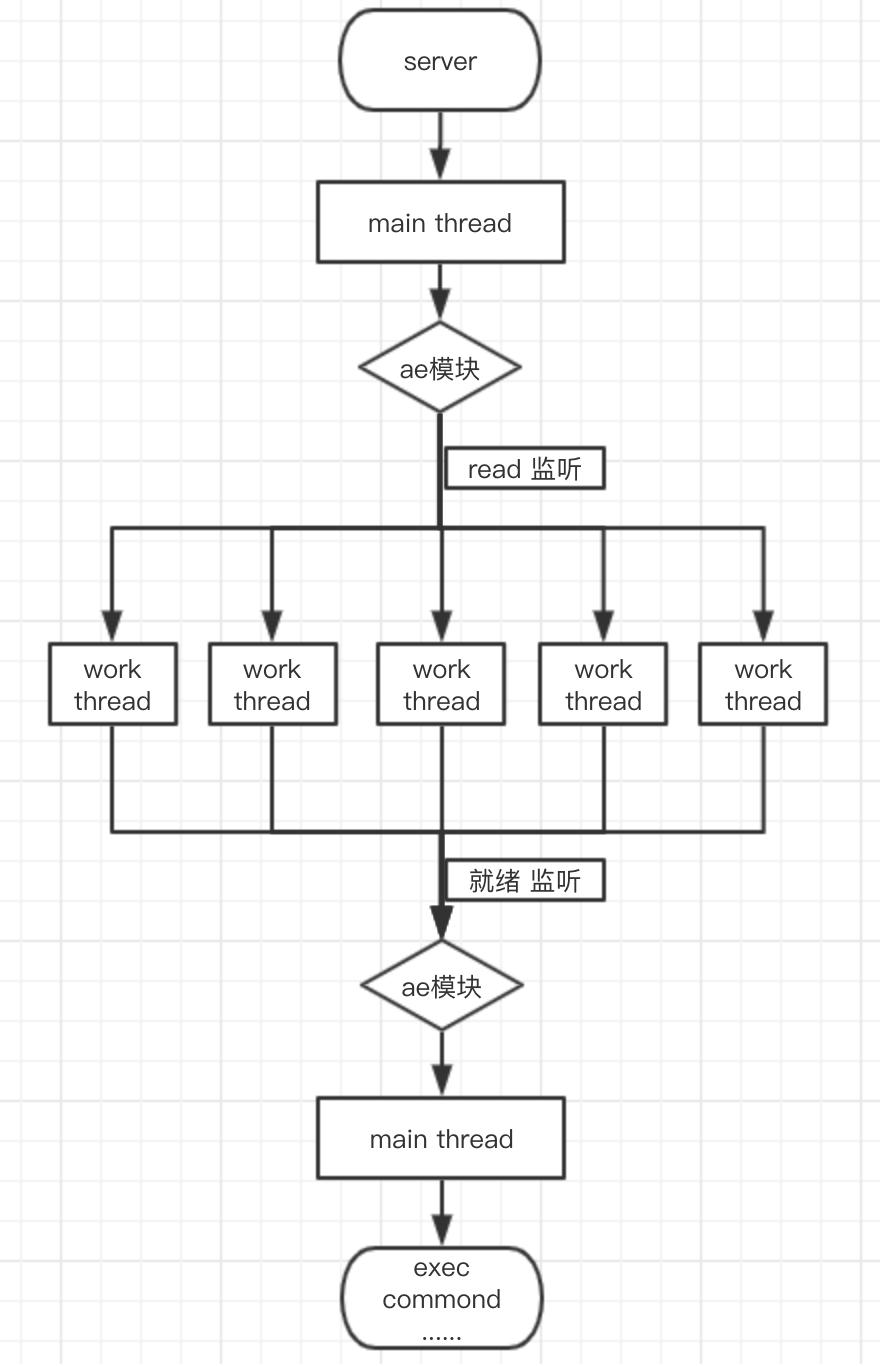

3、多线程

上面已经说了。Redis主要采用单线程IO多路复用实现高并发。后来为了处理耗时比较长的任务,Redis4.0引入了BackGround I/O线程。本身Redis的瓶颈并不是在于CPU,而是内存和网络IO。在一定程度上通过扩容即可。但是业务量不断扩大时,网络IO的瓶颈就体现出来了。这个时候使用多线程处理还是挺香的,充分发挥多核的优势。Redis6.0引入了多线程。

不过,这里强调一点,Redis引入了多线程,但也仅仅是用来网络读写,Redis命令的操作还是通过主线程顺序执行。这主要是为了减少Redis操作的复杂度等方面。

上面再说启动Background IO时,说到了InitServerLast,里面有个initThreadedIO,这个就是初始化线程IO的过程。

初始化线程IO的实现:

void initThreadedIO(void) {

server.io_threads_active = 0; /* We start with threads not active. */

/* Don't spawn any thread if the user selected a single thread:

* we'll handle I/O directly from the main thread. */

if (server.io_threads_num == 1) return;

if (server.io_threads_num > IO_THREADS_MAX_NUM) {

serverLog(LL_WARNING,"Fatal: too many I/O threads configured. "

"The maximum number is %d.", IO_THREADS_MAX_NUM);

exit(1);

}

/* Spawn and initialize the I/O threads. */

for (int i = 0; i < server.io_threads_num; i++) {

/* Things we do for all the threads including the main thread. */

io_threads_list[i] = listCreate();

//第一个是主线程,创建完continue

if (i == 0) continue; /* Thread 0 is the main thread. */

/* Things we do only for the additional threads. */

//worker线程注册回调函数。

pthread_t tid;

pthread_mutex_init(&io_threads_mutex[i],NULL);

setIOPendingCount(i, 0);

pthread_mutex_lock(&io_threads_mutex[i]); /* Thread will be stopped. */

//创建线程,并注册回调函数IOThreadMain

if (pthread_create(&tid,NULL,IOThreadMain,(void*)(long)i) != 0) {

serverLog(LL_WARNING,"Fatal: Can't initialize IO thread.");

exit(1);

}

io_threads[i] = tid;

}

}看上面代码,第一个是主线程,其他的都是Worker Thread。

一个图形可以反映上面的实现:

看一下他是咋实现多线程处理的。

其实请求处理流程还是和上面的一致,只是在readQueryFromClient有所不同。

void readQueryFromClient(connection *conn) {

client *c = connGetPrivateData(conn);

int nread, readlen;

size_t qblen;

/* Check if we want to read from the client later when exiting from

* the event loop. This is the case if threaded I/O is enabled. */

if (postponeClientRead(c)) return;

...

}如果是开启了线程IO,PostoneClientRead会把时间加入到队列中,待主线程分配给工作线程执行。

/* Return 1 if we want to handle the client read later using threaded I/O.

* This is called by the readable handler of the event loop.

* As a side effect of calling this function the client is put in the

* pending read clients and flagged as such. */

int postponeClientRead(client *c) {

if (server.io_threads_active &&

server.io_threads_do_reads &&

!ProcessingEventsWhileBlocked &&

!(c->flags & (CLIENT_MASTER|CLIENT_SLAVE|CLIENT_PENDING_READ)))

{

c->flags |= CLIENT_PENDING_READ;

listAddNodeHead(server.clients_pending_read,c);

return 1;

} else {

return 0;

}

}接着,在beforeSleep中会调用处理函数,多线程处理read操作。

int handleClientsWithPendingReadsUsingThreads(void) {

if (!server.io_threads_active || !server.io_threads_do_reads) return 0;

int processed = listLength(server.clients_pending_read);

if (processed == 0) return 0;

if (tio_debug) printf("%d TOTAL READ pending clients\n", processed);

/* Distribute the clients across N different lists. */

listIter li;

listNode *ln;

listRewind(server.clients_pending_read,&li);

int item_id = 0;

while((ln = listNext(&li))) {

client *c = listNodeValue(ln);

int target_id = item_id % server.io_threads_num;

listAddNodeTail(io_threads_list[target_id],c);

item_id++;

}

/* Give the start condition to the waiting threads, by setting the

* start condition atomic var. */

io_threads_op = IO_THREADS_OP_READ;

for (int j = 1; j < server.io_threads_num; j++) {

int count = listLength(io_threads_list[j]);

setIOPendingCount(j, count);

}

/* Also use the main thread to process a slice of clients. */

listRewind(io_threads_list[0],&li);

while((ln = listNext(&li))) {

client *c = listNodeValue(ln);

readQueryFromClient(c->conn);

}

listEmpty(io_threads_list[0]);

/* Wait for all the other threads to end their work. */

while(1) {

unsigned long pending = 0;

for (int j = 1; j < server.io_threads_num; j++)

pending += getIOPendingCount(j);

if (pending == 0) break;

}

if (tio_debug) printf("I/O READ All threads finshed\n");

/* Run the list of clients again to process the new buffers. */

while(listLength(server.clients_pending_read)) {

ln = listFirst(server.clients_pending_read);

client *c = listNodeValue(ln);

c->flags &= ~CLIENT_PENDING_READ;

listDelNode(server.clients_pending_read,ln);

if (c->flags & CLIENT_PENDING_COMMAND) {

c->flags &= ~CLIENT_PENDING_COMMAND;

if (processCommandAndResetClient(c) == C_ERR) {

/* If the client is no longer valid, we avoid

* processing the client later. So we just go

* to the next. */

continue;

}

}

processInputBuffer(c);

}

/* Update processed count on server */

server.stat_io_reads_processed += processed;

return processed;

}

主线程阻塞等待所有的工作线程都完成之后,将数据一同

下面是work线程的主要处理逻辑:

void *IOThreadMain(void *myid) {

/* The ID is the thread number (from 0 to server.iothreads_num-1), and is

* used by the thread to just manipulate a single sub-array of clients. */

long id = (unsigned long)myid;

char thdname[16];

snprintf(thdname, sizeof(thdname), "io_thd_%ld", id);

redis_set_thread_title(thdname);

redisSetCpuAffinity(server.server_cpulist);

makeThreadKillable();

while(1) {

/* Wait for start */

for (int j = 0; j < 1000000; j++) {

if (getIOPendingCount(id) != 0) break;

}

/* Give the main thread a chance to stop this thread. */

if (getIOPendingCount(id) == 0) {

pthread_mutex_lock(&io_threads_mutex[id]);

pthread_mutex_unlock(&io_threads_mutex[id]);

continue;

}

serverAssert(getIOPendingCount(id) != 0);

if (tio_debug) printf("[%ld] %d to handle\n", id, (int)listLength(io_threads_list[id]));

/* Process: note that the main thread will never touch our list

* before we drop the pending count to 0. */

listIter li;

listNode *ln;

listRewind(io_threads_list[id],&li);

//处理读写

while((ln = listNext(&li))) {

client *c = listNodeValue(ln);

//如果是写,写入并返回给客户端

if (io_threads_op == IO_THREADS_OP_WRITE) {

writeToClient(c,0);

//读操作

} else if (io_threads_op == IO_THREADS_OP_READ) {

readQueryFromClient(c->conn);

} else {

serverPanic("io_threads_op value is unknown");

}

}

listEmpty(io_threads_list[id]);

setIOPendingCount(id, 0);

if (tio_debug) printf("[%ld] Done\n", id);

}

}

Redis也部分和Memcached的思想有类似,Memcached也是通过主线程IO多路复用接受连接,并通过一定算法分配到工作线程,这和Redis6的多线程类似。但是,最大最大的不同是,Redis的多线程只是为了处理网络读写,不负责处理具体业务逻辑,命令还是主线程顺序执行的。然而Memcached是Master线程把连接分配给Worker之后,Worker线程就负责把处理后续的所有请求,完全是多线程执行。Redis要想做到这一点,需要做的工作还有很多,尤其是线程安全方面。据说或许要逐步加Key-level的锁,肯定是不断地完善的。

参考资料:

The little-known feature of Redis 4.0 that will speed up your applications

微信分享/微信扫码阅读